Last updated: June 4, 2024

Do you feel overwhelmed by the amount of information online? Are you having trouble keeping up with trends on social media? How can you pick out what is relevant from so much content?

This is where web scraping comes in. This powerful technique lets you collect specific data from social networks in order to identify trends.

In this article, we explain how to use web scraping to follow social media trends. You will see how this technique can change the way you consume information and help you stay ahead in your field.

What is web scraping?

Web scraping is a technique for collecting information from websites automatically. Imagine a small robot that browses a website and collects all the interesting information it finds there.

For example, to find out how many photos of cats there are on a site, you can send a robot to count the cat photos and report the total.
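The cat-counting robot can be sketched in a few lines of Python. This is only an illustration using the standard library's `html.parser`; the names `ImageCounter`, `count_images` and `SAMPLE_PAGE` are hypothetical, and the sketch assumes that images are described by their `alt` text, which real sites do not always provide.

```python
from html.parser import HTMLParser


class ImageCounter(HTMLParser):
    """Counts <img> tags whose alt text mentions a keyword."""

    def __init__(self, keyword):
        super().__init__()
        self.keyword = keyword.lower()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        if tag == "img":
            alt = dict(attrs).get("alt") or ""
            if self.keyword in alt.lower():
                self.count += 1


def count_images(html, keyword):
    """Return how many images in the HTML mention the keyword."""
    parser = ImageCounter(keyword)
    parser.feed(html)
    return parser.count


# Hypothetical page content, used here instead of a real download.
SAMPLE_PAGE = """
<html><body>
  <img src="a.jpg" alt="A sleepy cat">
  <img src="b.jpg" alt="Dog in the park">
  <img src="c.jpg" alt="Two cats playing">
</body></html>
"""

print(count_images(SAMPLE_PAGE, "cat"))
```

In a real scraper, the HTML string would come from downloading a page rather than from a constant in the script.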

Web scraping can also be used to collect other types of information, such as:

- User identification data, such as usernames, email addresses, phone numbers, etc.

- Product information, such as descriptions, images, prices, reviews, ratings, etc.

- Social media content, such as posts, likes, comments, shares, etc.

- Research data, such as search results, trends, keywords, hashtags, etc.

- Media, such as images, videos, audio files, etc.

- Financial data, such as stocks, exchange rates, transactions, etc.

Companies often use web scraping to better understand their market and improve their marketing strategy.

How to use web scraping to follow social media trends?

To use web scraping to track social media trends, follow these steps:

1) Identify relevant social networks

First of all, you need to identify the relevant social networks to scrape. Depending on your needs, it may be useful to scrape one or more social networks, such as Facebook, Twitter, Instagram, LinkedIn, etc.

2) Select the data to collect

Once the social networks have been selected, you need to decide which information to scrape. This can include posts, likes, comments, shares, hashtags, etc. Select only the information that serves your data collection objectives.

3) Choose web scraping tools

There are many web scraping tools on the market. It is important to choose the one that best suits your data collection needs.

Here are some criteria to consider:

- Complexity of websites to scrape.

- Information to collect.

- Necessary features.

- Costs.

- Legal aspects.

4) Write the scraping code

Once the tool has been chosen, you will often need to write specific code to scrape the desired data. This can be done in a programming language such as Python, which is particularly well suited to this task.

However, there are also web scraping tools that don't require coding skills, for people who prefer a simpler approach.

5) Scrape data

Now it's time to scrape the data using your chosen web scraping tool. Data can be stored in a database or in a CSV file for later analysis.
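As a minimal sketch of the CSV storage step, the example below writes a few records with Python's standard `csv` module. The records, the field names (`network`, `hashtag`, `likes`) and the helper `write_posts_csv` are hypothetical and stand in for whatever your scraper actually collects.

```python
import csv
import io

# Hypothetical scraped records; field names are illustrative only.
posts = [
    {"network": "Twitter", "hashtag": "#ai", "likes": 120},
    {"network": "Instagram", "hashtag": "#travel", "likes": 85},
]


def write_posts_csv(rows, handle):
    """Write a list of dicts to an open text handle as CSV."""
    writer = csv.DictWriter(handle, fieldnames=["network", "hashtag", "likes"])
    writer.writeheader()
    writer.writerows(rows)


# An in-memory buffer keeps the sketch free of side effects; to write a real
# file, pass open("posts.csv", "w", newline="", encoding="utf-8") instead.
buffer = io.StringIO()
write_posts_csv(posts, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

Writing through a file-like handle means the same function can target a file on disk or an in-memory buffer without changes.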

6) Analyze the results

Finally, you need to analyze the results to understand trends and insights. You can use data analysis tools such as Excel, Tableau, or tools specific to social media analysis, such as Hootsuite or Sprout Social.
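Before turning to a dedicated analysis tool, a first look at trends can be as simple as counting hashtags. The sketch below assumes the scraped posts are available as plain strings; the sample texts and the function `top_hashtags` are hypothetical.

```python
import re
from collections import Counter

# Hypothetical post texts standing in for scraped data.
posts = [
    "Loving the new phone #tech #AI",
    "Is #AI changing marketing? #marketing",
    "Weekend hike #travel",
    "More #ai news today #Tech",
]

# A hashtag is taken to be "#" followed by word characters.
HASHTAG = re.compile(r"#(\w+)")


def top_hashtags(texts, n=3):
    """Return the n most frequent hashtags, ignoring case."""
    counts = Counter()
    for text in texts:
        counts.update(tag.lower() for tag in HASHTAG.findall(text))
    return counts.most_common(n)


print(top_hashtags(posts, n=2))
```

Lowercasing the tags treats `#AI` and `#ai` as the same trend, which is usually what you want when comparing volumes.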

The most used web scraping tools

Bright Data

The first tool we recommend is Bright Data, a cloud-based data collection platform. With this tool, you can retrieve and analyze structured and unstructured data from millions of websites.

Bright Data's flagship tool is the Data Collector, which lets users extract many kinds of data from any public website using ready-to-use web scraping templates.

Furthermore, this platform offers a simple and customizable solution for data extraction. It can automatically collect data from different websites and offers a choice of output formats, such as JSON, CSV, Excel or HTML, as well as several delivery methods, such as API, email or Google Cloud.

In short, Bright Data is a strong option for efficiently retrieving and analyzing data on the web.

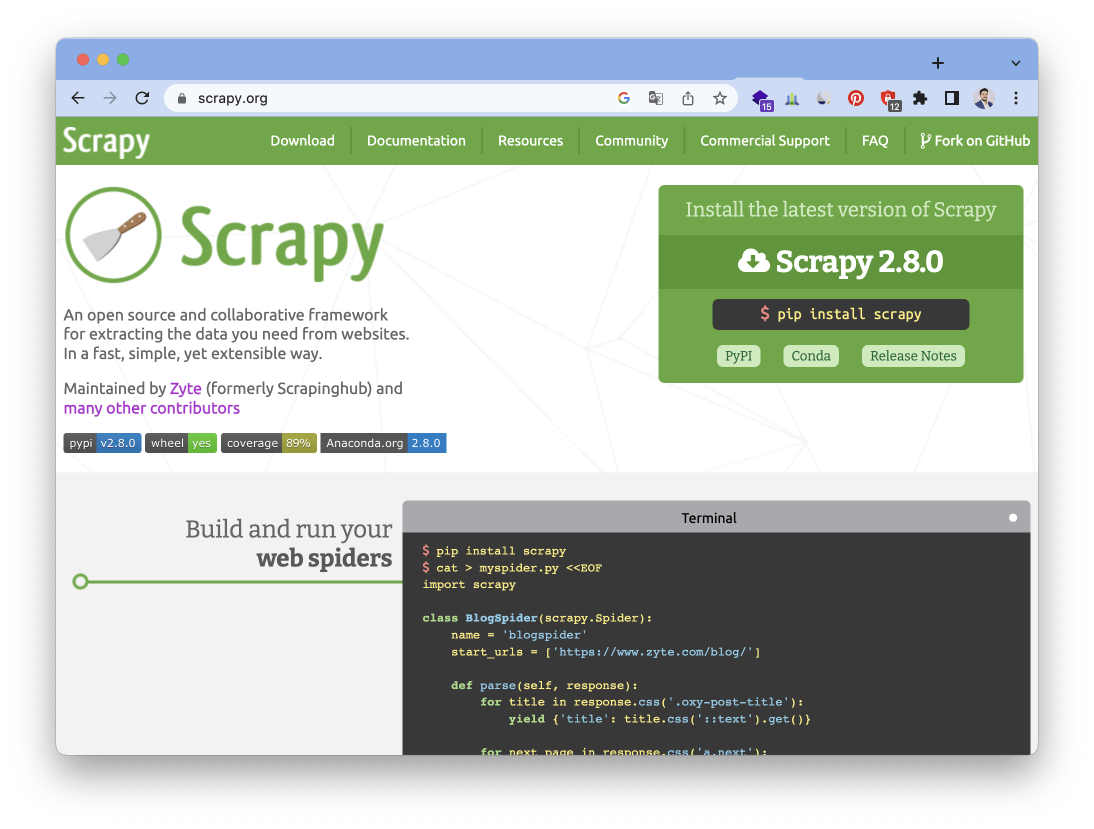

Scrapy

The second tool is Scrapy, an open source web scraping framework based on Python. Scrapy is designed to extract data at scale from complex websites, such as social networks and e-commerce sites.

Additionally, Scrapy offers many advanced features to make data extraction easier, such as cookie management, connection management, and proxy management. It also allows storing the collected data in different formats, such as CSV, JSON or XML.

WebHarvy

The third tool we present to you is WebHarvy, a desktop web scraping tool that allows you to extract data from websites using a graphical user interface. Unlike the other tools presented previously, WebHarvy is designed for users without programming skills and allows you to scrape data from multiple web pages at once.

Additionally, WebHarvy offers several advanced features such as the ability to solve captchas, use proxies, export data to different formats, and schedule tasks.

The extracted data can be exported to various formats, including CSV, TSV, XML, JSON, SQL and HTML. It is also possible to export data directly to databases such as MySQL, SQL Server and Oracle.

Beautiful Soup

If you are looking for a Python-based web scraping tool, Beautiful Soup is a library you should consider. It lets you extract data from HTML and XML files by traversing their parse trees and picking out specific elements, such as tags or attributes.

Additionally, Beautiful Soup offers advanced features to make data extraction easier, such as the ability to search for data based on specific patterns or use regular expressions to extract more complex data.
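As a small illustration of pattern-based search, the sketch below uses Beautiful Soup's `find_all` with a compiled regular expression as an attribute filter. It assumes the `beautifulsoup4` package is installed, and the HTML fragment and its `/tag/` URL scheme are hypothetical.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical HTML fragment; real pages will have different markup.
html = """
<div class="post"><a href="/tag/ai">#ai</a> <a href="/user/bob">bob</a></div>
<div class="post"><a href="/tag/travel">#travel</a></div>
"""

soup = BeautifulSoup(html, "html.parser")

# Keep only the links whose href starts with "/tag/".
tag_links = soup.find_all("a", href=re.compile(r"^/tag/"))
hashtags = [link.get_text() for link in tag_links]
print(hashtags)
```

The same filter style works for other attributes, so the pattern can be adapted once you know how the target site marks up its hashtags.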

Selenium

Finally, the last tool we suggest is Selenium, an open source browser automation tool that is widely used for web scraping. It lets you control a web browser to extract data from websites.

Because it drives a real browser, Selenium is commonly used to extract data from dynamic websites, such as social networks or e-commerce sites. It lets you simulate user behavior and interact with elements of the web page, which makes it particularly suitable for complex websites.

Selenium also offers the ability to control several different browsers, such as Chrome, Firefox, Safari, and Internet Explorer. This allows users to choose the browser that best suits their needs.
